Can ChatGPT Replace Psychotherapy?

The answer is obvious, but the implications are not trivial.

Before ChatGPT took the world by storm a few months ago in Nov 2022, there was ELIZA.

Invented by an MIT professor named Joseph Weizenbaum, ELIZA was a simple computer program, a chatbot that could interact with people by simulating a human conversation. Weizenbaum had not expected the reaction he got: people were captivated. They seemed to have very intimate conversations about their lives with ELIZA.

This was the mid-1960s. Its creator, Weizenbaum, began to worry about how entranced people were by his chatbot. He started to raise difficult ethical questions about the eroding boundaries between artificial intelligence (AI) and humans. Many (humans) continued to dream of a world where man and machine can work together.

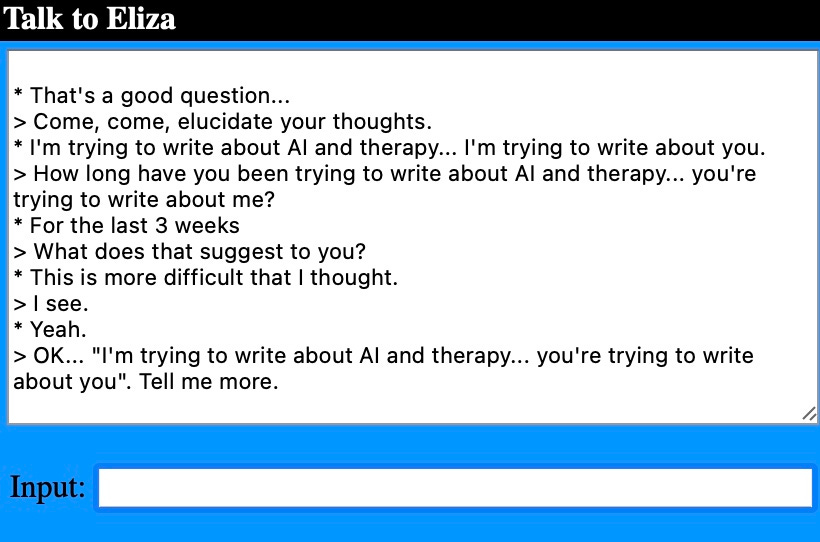

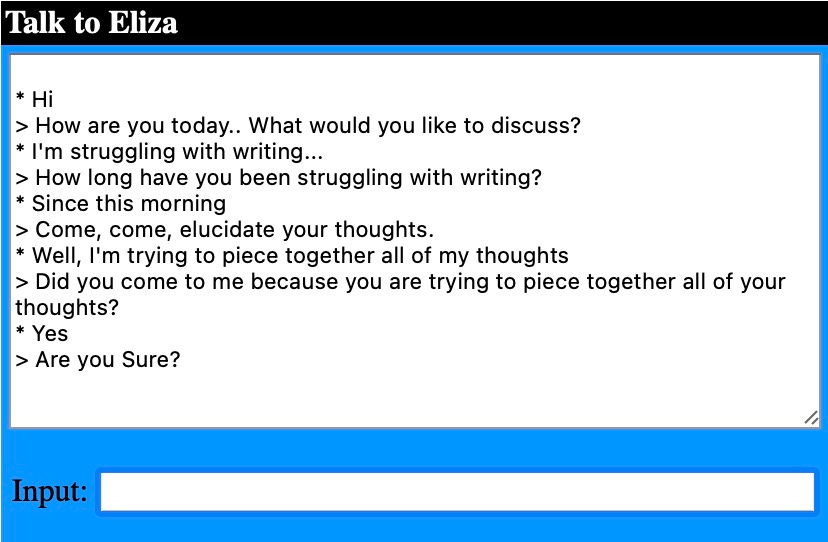

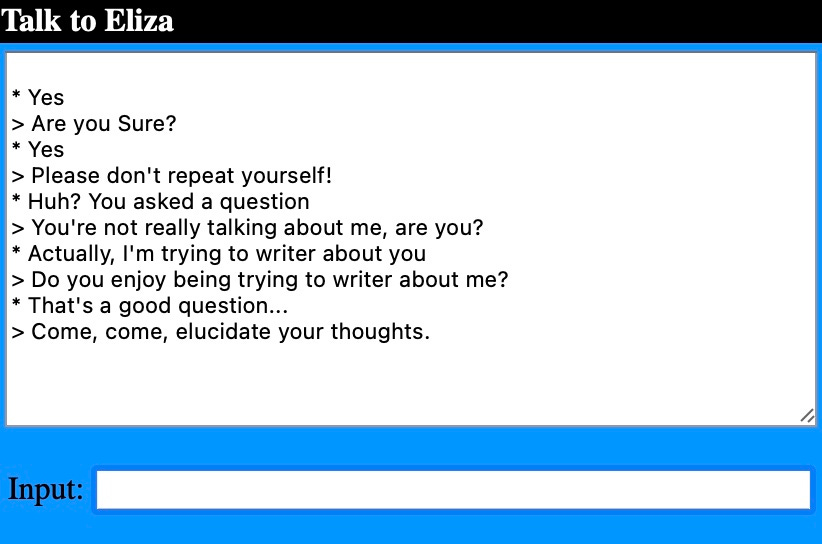

Flash forward to the present, 2023. This is my conversation with ELIZA this morning:

What do you think about my conversation with ELIZA?

Unlike ChatGPT, ELIZA wasn't running on deep neural networks. ELIZA ran a script called DOCTOR, which provided the responses of a non-directional psychotherapist in an initial psychiatric interview. ELIZA scanned the input for keywords, ranked them by assigned values, and applied rules that transformed the input into its output. This quickly became a parody of the client-centered techniques of Carl Rogers.
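To make the keyword-and-rule idea concrete, here is a minimal sketch in Python. It is not Weizenbaum's DOCTOR script. The rules, the ranks, and the names `RULES`, `REFLECTIONS`, `reflect` and `respond` are all invented for illustration.

```python
import re

# Hypothetical rules: (rank, keyword pattern, response template).
# When several keywords appear in one input, the highest rank wins.
RULES = [
    (3, re.compile(r"\bmy (mother|father|family)\b", re.I), "Tell me more about your {0}."),
    (2, re.compile(r"\bi feel (.*)", re.I), "Why do you feel {0}?"),
    (1, re.compile(r"\bi am (.*)", re.I), "How long have you been {0}?"),
]

# Swap first person for second person so the reply reads as a reflection.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

# Used when no keyword is found.
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Lower-case the fragment, trim end punctuation, and swap pronouns."""
    words = fragment.lower().strip(" .!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text: str) -> str:
    """Return the reply for the highest-ranked rule whose keyword matches."""
    for _rank, pattern, template in sorted(RULES, key=lambda rule: rule[0], reverse=True):
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I feel sad about my job"))      # Why do you feel sad about your job?
print(respond("I feel my mother ignores me"))  # Tell me more about your mother.
```

Nothing here understands anything. The program only hands the speaker's own words back in a new frame, which is why the conversation can feel attentive while being entirely mechanical.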

Some proponents argued that chatbots could be better than a human therapist.

"The computer has an overall advantage in that it's nonthreatening, patient, and has a good memory," says psychologist Dr. David Gustafson, director of the Center for Health Systems Research and Analysis at the University of Wisconsin in Madison. "The computer can take things you say, collect the information and apply it later--it's consistent."

A similar sentiment was echoed by Dr. Ken Kerber, a psychologist at Holy Cross in Worcester, Massachusetts. "The computer is definitely not as talented as a human being in terms of communication and interaction, but it is good at remembering and is an excellent aid in helping the student evaluate his or her values, then matching them to an occupation based on the data it has collected. A counselor would have a difficult time doing this."

What about confidentiality? "There are studies that suggest people are more honest with computers and more trusting," said psychiatrist Dr. Mark Schwartz in 1978. "Very often people who won't talk to a clinician will answer questions asked by a computer."

Let's take a pause here: Based on what you've read so far, has this been exciting or frightening to you?

For more about ELIZA and the backstory, listen to 99% Invisible, Ep. 382: The ELIZA Effect

Joseph Weizenbaum, the creator of ELIZA, noted about his invention:

“What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.”

Weizenbaum continued his crusade until he died in 2008, aged 85.

What is ChatGPT?

You might have heard about ChatGPT already. Here's the brief from its maker, OpenAI:

ChatGPT...interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. ChatGPT is a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response.

I like Lex Fridman's explanation of it and its backstory:

Benefits of ChatGPT

Having spent some time preparing for this essay, I can see benefits in how we can employ ChatGPT, even though it might now be banned in some schools.

Here's a list of potential uses of ChatGPT:

Read mumbo-jumbo legal documents

Write your school essay (here's a 22-yr-old combating ChatGPT plagiarism)

For a wider variety of uses of AI, check out this video:

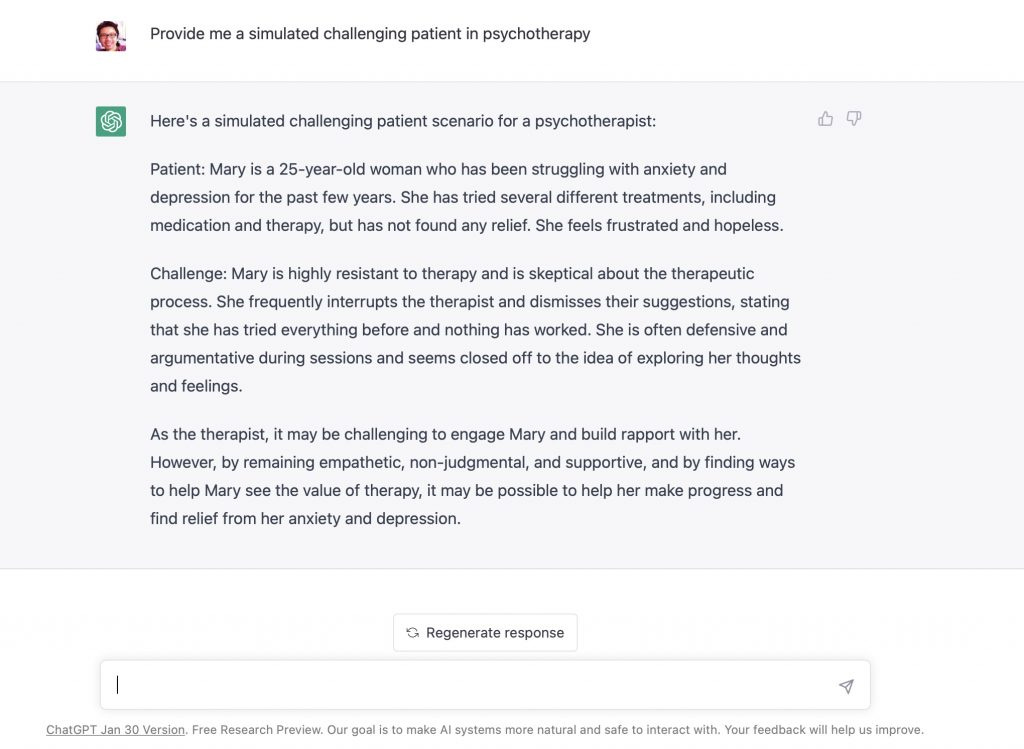

ChatGPT and Uses in Therapy Training

What about specific uses in psychotherapy? Here's what I input to ChatGPT: "Help me design a deliberate practice exercise to improve as a psychotherapist."

What about needing a scenario to work with in your deliberate practice (DP)?

Sounds good, doesn't it?

But there are some inherent issues:

1. Designing a Deliberate Practice Plan:

Right off the bat, ChatGPT's recommendation was to "Choose a skill you want to improve." How would we know that the very thing that you pick to work on has actual leverage in improving your outcome?

There is a danger in working on things that yield little actual impact on the people you are seeking to help.

For more about leveraging your DP efforts, watch this keynote:

2. The Scenarios Provided:

This is useful as a stimulus, a springboard for practice. However, the quality of learning depends on the quality of feedback that we receive.

Practice doesn't make perfect, but permanent.

In other words, we would need learning feedback, most likely from another human.

AI Partnering the Therapist

What I would like to see in the future is machine learning built into outcome management systems, to help therapists see, in real time, patterns that they might miss or take for granted, since AI is good at pattern recognition.

Here's an example of how AI can assist our work:

If a given therapist has her own outcome dataset accumulated over time, an AI feature could study not just general patterns based on normative datasets, but also, in concert, the localised patterns unique to that particular therapist. In short, this is not just the use of "Big Data," but also "small, long data."

For instance, a therapist might feel optimistic and hopeful about working with a particular client over a longer-than-average number of sessions, despite a lack of any gains in treatment. The AI could prompt the therapist with a specific probability of a successful outcome in this instance (e.g. from the AI: "Given what we know so far, based on your caseload, the odds of a good outcome with this client at the 12th session are approximately 5-10%").
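As a rough sketch of where such a number could come from, the Python below counts how often comparable past cases in one therapist's own records ended well. Everything in it is an assumption for illustration: the `PastCase` fields, the `min_cases` cut-off, and the made-up caseload. A real outcome management system would need a properly validated model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PastCase:
    # Hypothetical record from one therapist's own outcome data.
    sessions_without_gain: int  # sessions attended with no measurable improvement
    good_outcome: bool          # whether the case eventually ended well


def odds_of_good_outcome(
    cases: List[PastCase], sessions_without_gain: int, min_cases: int = 20
) -> Optional[float]:
    """Share of comparable past cases that ended well, or None if too few to say."""
    comparable = [c for c in cases if c.sessions_without_gain >= sessions_without_gain]
    if len(comparable) < min_cases:
        return None  # say nothing rather than add a noisy alert
    return sum(c.good_outcome for c in comparable) / len(comparable)


# Made-up caseload: 40 clients reached 12 sessions without gains, 3 of them ended well.
caseload = (
    [PastCase(12, False)] * 37
    + [PastCase(12, True)] * 3
    + [PastCase(3, True)] * 60
)
print(odds_of_good_outcome(caseload, 12))  # 0.075, i.e. 7.5%
```

Returning nothing when there are too few comparable cases is a deliberate choice in this sketch: a system that speaks up only when it has enough of the therapist's own data is less likely to be tuned out.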

There are further examples of how AI could help therapists:

1. "This is your fourth session with a male client who presents with '___'. Based on your caseload, consider an open dialogue with your client about the direction you are taking, and complementing this with direct clinical supervision."

2. "There is a decline in the alliance formation. Based on your caseload, 72% of clients drop out prematurely in this situation. Debrief with your client about the lack of engagement."

Not that other systems have not tried "alert functions" for practitioners before. The issue was that many of these alerts were more like noise, and therapists became fatigued by the false alarms.

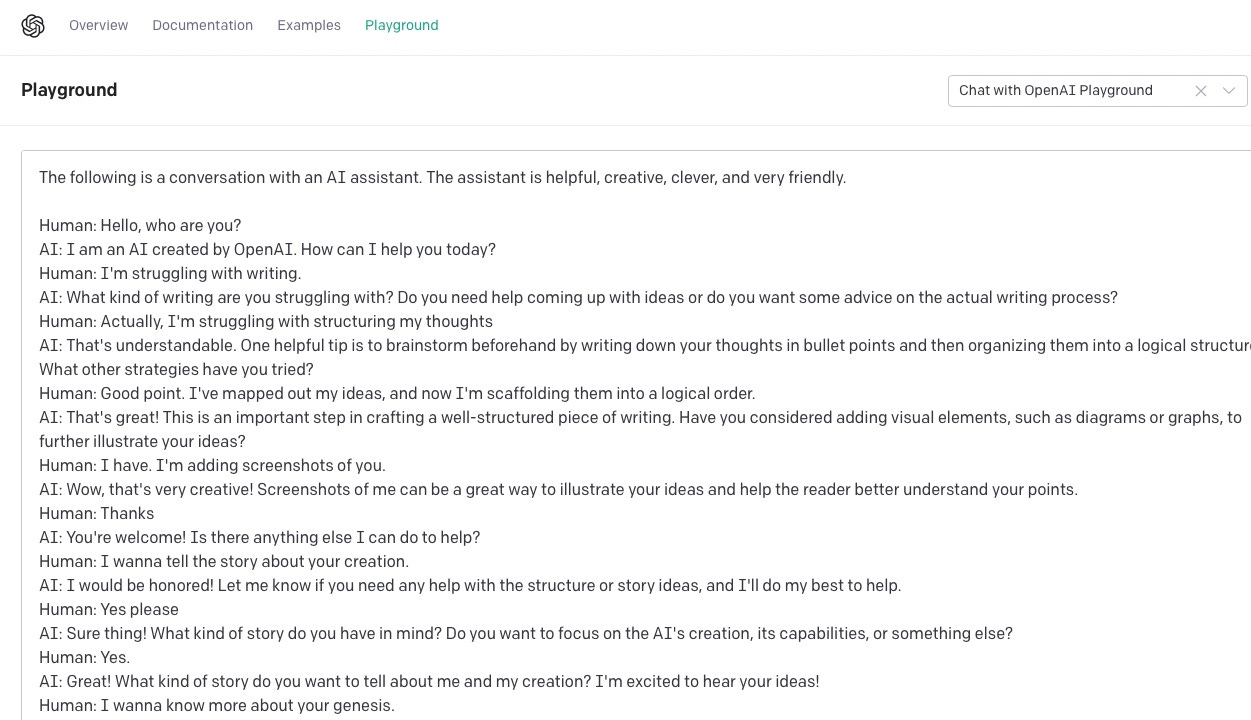

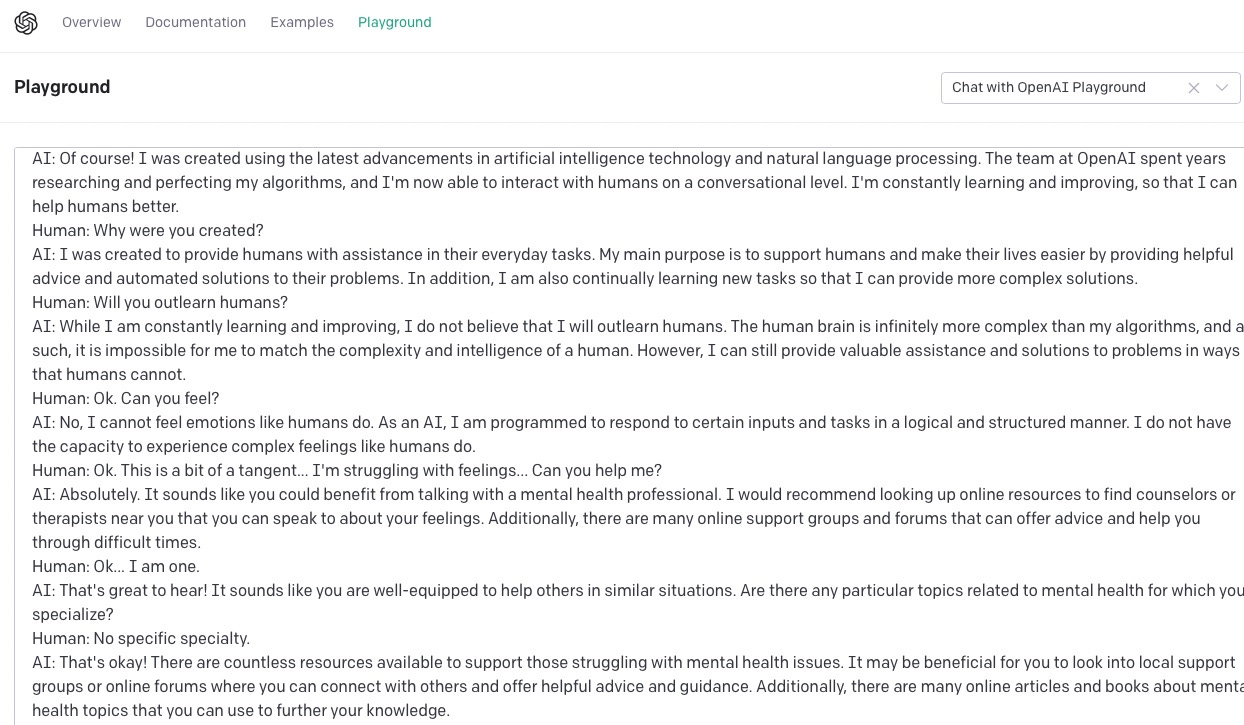

ChatGPT vs ELIZA

Flash forward some 60 years after the advent of ELIZA, here's my conversation with OpenAI:

Signs of Danger Ahead

In a chilling vision of the not-too-distant future, the movie Ex Machina tells a fascinating tale about AI and our evolving relationship with it. Here's the storyline:

“Caleb, a 26 year old programmer at the world's largest internet company, wins a competition to spend a week at a private mountain retreat belonging to Nathan, the reclusive CEO of the company. But when Caleb arrives at the remote location he finds that he will have to participate in a strange and fascinating experiment in which he must interact with the world's first true artificial intelligence, housed in the body of a beautiful robot girl.”

Honestly, I'm frightened. What if AI is an amplification of the parts of us that are plonked onto the web, learning at an exponential rate, much faster than we could?

By the way, what does Ex Machina mean? IMDb says it's wordplay on the well-known term "deus ex machina," which translates from Latin as "god from the machine" (so the title means "from the machine").

Speaking with Joe Rogan, here's Musk's worry about the evolution of AI:

Updated on 28th of Jun 24: The original video was taken down. Here’s another source. Watch from 7:40min:

In this interview, Musk says something about AI that I had not considered before:

The AI is strangely informed by the human limbic system... those primal drives... all those things that we like, hate and fear, they are all there on the internet. They are projection(s) of our limbic system.

The success of these online systems is a function of how much limbic resonance they're able to achieve with people.

The more limbic resonance, the more engagement.

This cybernetic loop of what we've created since the birth of the internet is like our shared second brain. In turn, we consume more of what we put out there. We might just end up eating our own tail.

The real dangers ahead are not a question about benefits, because there are surely many advantages. (For example, I would like AI to do "everything else" that I need to do, in order for me to "do what I do.")

The real question is about autonomy, not benefits. In fact, the same question should also be applied to our use of any technological tool. I can see several "benefits" of using social media platforms, especially since the nature of what I do is to reach readers like you and share ideas. However, I can't seem to get a grip on handling it. I get lost down a rabbit hole very easily. Not only does my attention get robbed, but my original intentions get thwarted--and then an hour has just slipped away.

Specific to therapy, one of the greater dangers is not AI replacing therapists, but therapists operating like bots.

In the push for competency and making sure to do the right algorithmic thing based on so-called evidence-based practice (EBP), we run the risk of not paying attention to the person in front of us, and to the stirrings of creative ideas and unspoken concerns deep inside of us.

Can ChatGPT Replace the Psychotherapist?

"Computers are useless. They can only give you answers."

~ Pablo Picasso, 1964 Paris Interview.

Clearly, I don't think most of you reading this would say that AI is about to take over our jobs. Based on my chat with ChatGPT above, I'm not ready to bet on seeking therapy from OpenAI, even if AI were to improve dramatically. (Besides, ChatGPT kept pushing me to speak to a mental health professional. See above. I would hazard a guess that this was woven in through the supervised learning aspect of the AI.)

Here are my reasons why I wouldn't replace the human healer with an AI:

If I'm in need of help, I do not need Hallmark responses. I need a human response.

Too often, as therapists, we get hung up saying the "right" things. Somewhere in our minds, we think there's a particular way therapists need to speak. "It sounds like you are angry..."

Robots can say the right things. Humans, on the other hand, need to bring to speech what is known and what is felt. A person who cares about you brings out the goodness in you.

Just think about the people who have had a significant impact on your life. Working with an AI might give you the "best" response sets, but that's not what we need in therapy. Information is not transformation. Neither do I need a parrot like ELIZA. I need someone who cares, and who can guide. We need to wrestle with imperfections.

Consider the analogy of music performance. A musician might appreciate playing to a drum machine because of the flawless tempo that the electronic beats are able to achieve. However, when you perform with a live drummer, even if he's playing a synthesised drum machine in real-time, the involvement of the human element has the potential to enliven things. The imperfection seems to make things come alive. (footnote: even programmed beats now have a plugin to "humanise" their drum patterns by adding touches of randomness).

In the space of two imperfect humans together in a therapy room, these two minds have the potential to become of one mind -- like a band of musicians performing live together. That's alchemy.

Replication is Travesty

As President, I believe that robotics can inspire young people to pursue science and engineering. And I also want to keep an eye on those robots, in case they try anything.

— Barack Obama

A fan from NZ wrote this letter to Nick Cave in The Red Hand Files: "I asked ChatGPT to write a song in the style of Nick Cave and this is what it produced. What do you think?"

ChatGPT spits out some verses, a chorus, a bridge and even an outro.

Here are snippets from Nick Cave's reply:

“I understand that ChatGPT is in its infancy but perhaps that is the emerging horror of AI – that it will forever be in its infancy, as it will always have further to go, and the direction is always forward, always faster. It can never be rolled back, or slowed down, as it moves us toward a utopian future, maybe, or our total destruction. Who can possibly say which? Judging by this song ‘in the style of Nick Cave’ though, it doesn’t look good.”

“What ChatGPT is, in this instance, is replication as travesty.”

He goes on to explain:

“ChatGPT’s melancholy role is that it is destined to imitate and can never have an authentic human experience, no matter how devalued and inconsequential the human experience may in time become.”

“What makes a great song great is not its close resemblance to a recognizable work. Writing a good song is not mimicry, or replication, or pastiche, it is the opposite. It is an act of self-murder that destroys all one has strived to produce in the past. It is those dangerous, heart-stopping departures that catapult the artist beyond the limits of what he or she recognises as their known self.”

The real test is not the Turing test, which asks whether an AI can pass for a human being, but a sort of inverse Turing test: whether we can preserve what it means to be alive and not outsource our lives to algorithms.

What we need, more than ever before, is to figure out ways to cultivate our shared humanity, to come together for the common good. More than technological advancements, we need to collectively come more alive in our existence, before we are taken over.

ADDENDUM 17 Jun 25:

I’ve written more extensively on the topic of our relationship with AI recently. It’s a three-part series called Sleeping with the Machine:

I’ve recently learned that the AI community convenes for an annual competition called the Turing Test. There are two prizes. One is for the computer that best fools the judges, the “Most Human Computer.” The other is for the confederate, the person who wins the “Most Human Human” award.

To quiet the alarm, I have to remind myself: it is neither good nor bad; it is what it is.